JavaScript can be an SEO pitfall for many websites. Using JS is not bad for SEO in itself, but poor implementation often leads to crawling and rendering issues.

Whether you're managing a single-page application, a complex web app, or a content-rich site, this checklist will help you implement JavaScript in a way that search engines can crawl, render, and index.

JavaScript SEO Diagnostic & Assessment Checklist:

- Check JavaScript Reliance

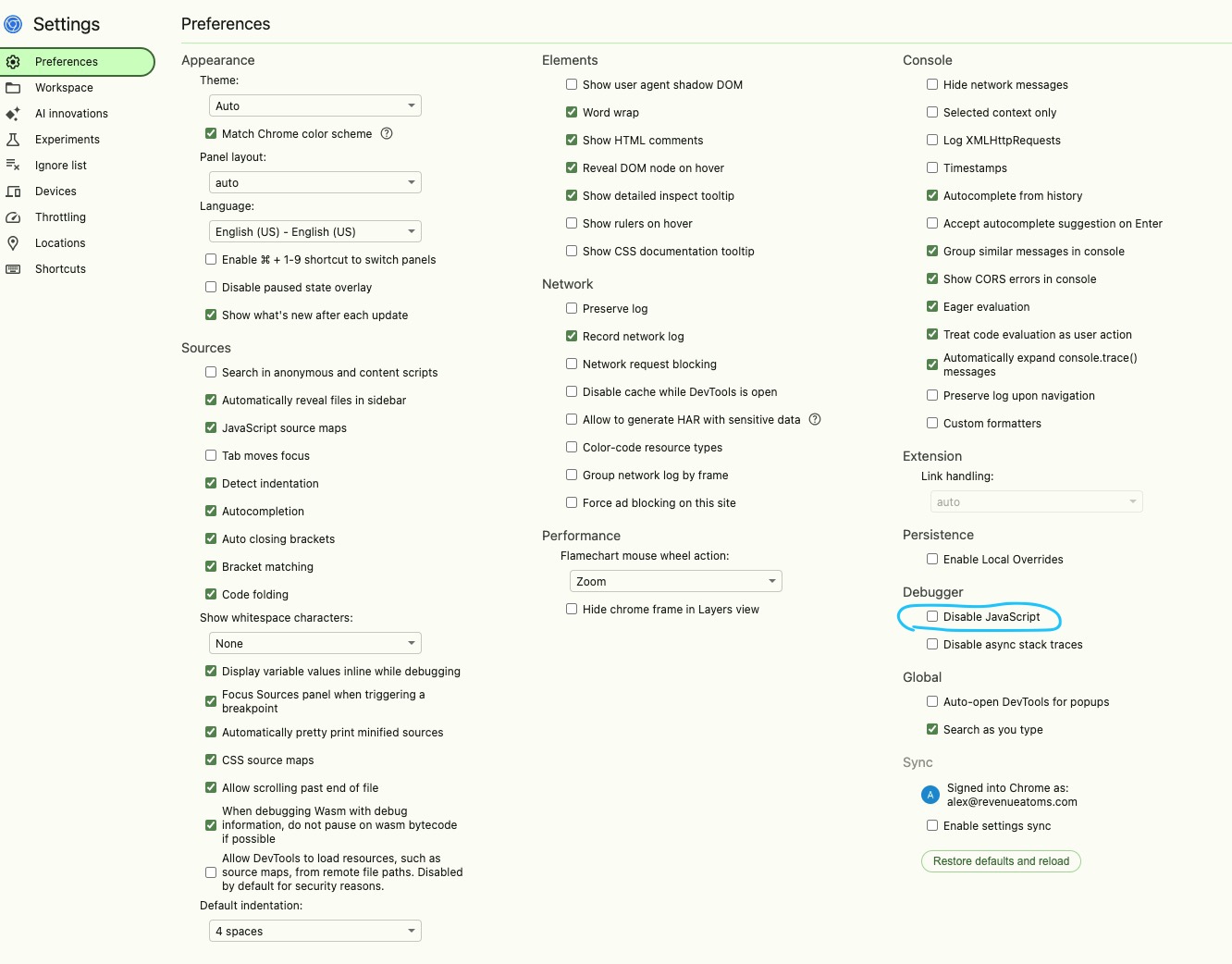

- Manual Check: Disable JavaScript in your browser (using an extension like Web Developer) and see if critical content and links appear.

- Bulk Check with a Crawler

- Crawl the site without JavaScript rendering (e.g., Screaming Frog “Text Only” mode, JetOctopus without JS rendering, Sitebulb in HTML Crawler mode).

- Compare the results to a JavaScript-rendered crawl.

- Determine which pages rely heavily on JS for critical content.
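
Once both crawls are exported, the comparison in the bulk check can be scripted. The sketch below is one possible approach, assuming each export has been reduced to rows of `{ url, wordCount, linkCount }`. Those field names and the 50% threshold are assumptions for illustration; real crawler exports use their own column names.

```javascript
// Flags pages where most of the words or links exist only after JS rendering.
// The row shape and the 0.5 threshold are assumptions, not crawler defaults.
function findJsReliantPages(rawCrawl, renderedCrawl, threshold = 0.5) {
  const rawByUrl = new Map(rawCrawl.map((row) => [row.url, row]));

  // Share of the rendered total that is missing from the raw HTML (0 to 1).
  const jsShare = (rawCount, renderedCount) =>
    renderedCount > 0 ? Math.max(0, 1 - rawCount / renderedCount) : 0;

  return renderedCrawl
    .map((rendered) => {
      // A URL missing from the raw crawl is treated as having no raw content.
      const raw = rawByUrl.get(rendered.url) || { wordCount: 0, linkCount: 0 };
      return {
        url: rendered.url,
        wordShareFromJs: jsShare(raw.wordCount, rendered.wordCount),
        linkShareFromJs: jsShare(raw.linkCount, rendered.linkCount),
      };
    })
    .filter((p) => p.wordShareFromJs >= threshold || p.linkShareFromJs >= threshold);
}
```

Pages returned by this function are the ones worth checking first in the rendering tests that follow.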

- Validate Googlebot’s View of the Page

- Use the URL Inspection Tool in Google Search Console to see the rendered HTML and screenshot from Googlebot’s perspective.

- Use the Rich Results Test to view how Googlebot renders the page if GSC access is not available.

- Confirm that all critical content and internal links are present in the rendered HTML.
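
If you copy the rendered HTML out of the URL Inspection Tool, a small script can confirm that the phrases you care about are there. This is a rough sketch: it strips tags with regular expressions rather than a real HTML parser, and the phrase list is whatever you decide is critical for the page.

```javascript
// Returns the critical phrases that do not appear in the rendered HTML's visible text.
// Regex-based tag stripping is a simplification; a real HTML parser is more robust.
function findMissingPhrases(renderedHtml, criticalPhrases) {
  const text = renderedHtml
    .replace(/<(script|style)\b[^>]*>[\s\S]*?<\/\1>/gi, " ") // drop inline scripts and styles
    .replace(/<[^>]+>/g, " ") // drop the remaining tags
    .replace(/\s+/g, " ") // collapse whitespace
    .toLowerCase();
  return criticalPhrases.filter((phrase) => !text.includes(phrase.toLowerCase()));
}
```

An empty result means every phrase was found; anything returned is content Googlebot did not get in the rendered HTML.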

- Confirm Indexing of JS-Generated Content

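- Use the site: search operator together with an exact phrase in quotes (for example, site:example.com "a sentence that only JavaScript adds") to check if JS-added content is indexed.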

- Verify in Google Search Console’s URL Inspection Tool that JS-generated content appears in the rendered HTML.

- If content is not indexed, investigate why Google can’t see or process that JS-based content.
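
The site: check can be turned into a repeatable link for each page you audit. A minimal sketch, where the function name is hypothetical and the phrase is a sentence you know is injected by JavaScript:

```javascript
// Builds a Google search URL for the query: site:<host> "<exact phrase>"
function buildSiteQueryUrl(pageUrl, phrase) {
  const host = new URL(pageUrl).hostname;
  const query = `site:${host} "${phrase}"`;
  return "https://www.google.com/search?q=" + encodeURIComponent(query);
}
```

Opening the resulting URL in a browser shows whether Google has indexed that phrase for the domain.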

- Compare Source HTML vs. Rendered HTML

- Ensure critical content, links, and structured data appear in rendered HTML.

- If content only appears after user interaction (clicks, scrolling), change the page so that it loads without interaction, because Googlebot does not click or scroll.
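
One quick way to compare source and rendered HTML is to diff the links in each. The sketch below assumes you have both versions as strings (for example, from "View Source" and from the URL Inspection Tool). It uses a regular expression rather than an HTML parser, so treat it as a rough check.

```javascript
// Collects href values from <a> tags. Regex matching is a simplification.
function extractHrefs(html) {
  const hrefs = new Set();
  const anchorHref = /<a\b[^>]*?\shref\s*=\s*(["'])(.*?)\1/gi;
  for (const match of html.matchAll(anchorHref)) {
    hrefs.add(match[2]);
  }
  return hrefs;
}

// Links that exist only after rendering, i.e. links that depend on JavaScript.
function linksOnlyInRendered(sourceHtml, renderedHtml) {
  const sourceHrefs = extractHrefs(sourceHtml);
  return [...extractHrefs(renderedHtml)].filter((href) => !sourceHrefs.has(href));
}
```

A long list from `linksOnlyInRendered` suggests that internal linking depends on rendering and deserves a closer look.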

JavaScript SEO Implementation & Best Practices Checklist:

- Rendering & Content Delivery

- Server-Side Rendering (SSR) or Pre-Rendering: Provide fully rendered HTML to search engines.

- Progressive Enhancement: Ensure core content and critical elements are delivered in HTML first, with JavaScript enhancing functionality afterward.

- Avoid User-Interaction-Dependent Content: Ensure Googlebot can access all essential content without clicking or scrolling.
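
To illustrate what "fully rendered HTML" means, here is a framework-free sketch of a server-side render function. The product shape (`name`, `description`, `url`) and the `/js/app.js` path are hypothetical; in practice a framework's SSR feature would usually do this work.

```javascript
// Escapes values before placing them in HTML.
function escapeHtml(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Returns a complete HTML document with the core content already in the markup.
// Hypothetical product shape: { name, description, url }.
function renderProductPage(product) {
  return `<!doctype html>
<html lang="en">
<head>
  <title>${escapeHtml(product.name)}</title>
  <link rel="canonical" href="${escapeHtml(product.url)}">
</head>
<body>
  <main id="app">
    <h1>${escapeHtml(product.name)}</h1>
    <p>${escapeHtml(product.description)}</p>
  </main>
  <!-- Client-side JS enhances markup that already exists. -->
  <script src="/js/app.js" defer></script>
</body>
</html>`;
}
```

The heading and description are readable without executing any script, which is the point of both SSR and progressive enhancement.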

- Linking & Navigation

- Use Standard HTML <a> Tags: Don’t rely solely on JS-based links (javascript:void(0) or onclick) for important navigation. Googlebot discovers URLs from href attributes and does not click elements, so JS-only links often go uncrawled.

- Ensure Menus Are Crawlable: Navigation menus should not depend solely on JavaScript. Links should be present in the static HTML or be easily rendered into the DOM.

- Paginate with Real URLs: Provide paginated URLs like ?page=2 instead of infinite scroll or “Load More” buttons reliant on clicks.
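
The two link rules above can be sketched as helper functions. Both names are hypothetical, and the href check is deliberately simplified: it only rejects the patterns named in this checklist.

```javascript
// Rough check of whether an href gives a crawler a URL to follow.
// Simplified: it does not validate schemes such as mailto: or tel:.
function isCrawlableHref(href) {
  if (typeof href !== "string") return false; // <a> with no href at all
  const value = href.trim();
  if (value === "" || value.startsWith("#")) return false; // empty or fragment-only
  if (/^javascript:/i.test(value)) return false; // e.g. javascript:void(0)
  return true;
}

// Builds real, linkable URLs for a paginated listing.
function paginationUrls(baseUrl, totalPages) {
  const urls = [];
  for (let page = 1; page <= totalPages; page++) {
    const url = new URL(baseUrl);
    if (page > 1) url.searchParams.set("page", String(page)); // page 1 keeps the clean URL
    urls.push(url.toString());
  }
  return urls;
}
```

Rendering the output of `paginationUrls` as ordinary `<a href>` links gives crawlers a path to deeper pages even if users see infinite scroll or a “Load More” button.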

- Redirects

- Use Server-Side (HTTP) Redirects: Prefer 301/302 redirects over JS-based redirects.

- If JS redirects are unavoidable, ensure they execute quickly and that Google can see them upon rendering.
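
A server-side redirect can be as simple as a lookup that runs before any page is rendered. The sketch below assumes a hypothetical redirect map and returns the status and headers to send. How you wire it in depends on your server or framework.

```javascript
// Hypothetical redirect map: old path -> new path.
const REDIRECTS = new Map([
  ["/old-pricing", "/pricing"],
  ["/blog/js-seo-2019", "/blog/javascript-seo"],
]);

// Returns the redirect response for a path, or null when the page should be served normally.
function resolveRedirect(pathname, redirects = REDIRECTS) {
  const target = redirects.get(pathname);
  return target ? { status: 301, headers: { Location: target } } : null;
}

// Inside a Node-style request handler this could be used as:
//   const redirect = resolveRedirect(new URL(req.url, "https://example.com").pathname);
//   if (redirect) { res.writeHead(redirect.status, redirect.headers); res.end(); return; }
```

Because the redirect is in the HTTP response, crawlers see it on the first fetch without having to render the page.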

- Resources & Blocking

- Check robots.txt: Do not block essential JS and CSS files. Googlebot must access these resources to render the page fully.

- Avoid Overly Aggressive Resource Blocking: Don’t disallow /js/ or /css/ directories if they contain critical rendering files.
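
A quick script can flag rendering resources that robots.txt blocks. This sketch is intentionally limited: it only reads Disallow rules in groups addressed to all crawlers (`*`) and uses plain prefix matching, ignoring Allow rules, wildcards, and crawler-specific groups. Use Search Console's robots.txt report for an authoritative answer.

```javascript
// Collects Disallow path prefixes from groups that apply to all crawlers ("*").
// Limitations: ignores Allow rules, wildcards, and crawler-specific groups.
function disallowedPrefixes(robotsTxt) {
  const prefixes = [];
  let groupAgents = [];
  let lastWasAgent = false;
  for (const rawLine of robotsTxt.split(/\r?\n/)) {
    const line = rawLine.split("#")[0].trim(); // strip comments
    const separator = line.indexOf(":");
    if (separator === -1) continue;
    const field = line.slice(0, separator).trim().toLowerCase();
    const value = line.slice(separator + 1).trim();
    if (field === "user-agent") {
      // Consecutive User-agent lines share one group; otherwise a new group starts.
      groupAgents = lastWasAgent ? [...groupAgents, value] : [value];
      lastWasAgent = true;
      continue;
    }
    lastWasAgent = false;
    if (field === "disallow" && value !== "" && groupAgents.includes("*")) {
      prefixes.push(value);
    }
  }
  return prefixes;
}

// Returns the resource paths that start with a disallowed prefix.
function findBlockedResources(robotsTxt, resourcePaths) {
  const prefixes = disallowedPrefixes(robotsTxt);
  return resourcePaths.filter((path) => prefixes.some((prefix) => path.startsWith(prefix)));
}
```

Feed it the JS and CSS paths from a rendered crawl; any path it returns is a candidate for unblocking.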

- Performance & Optimization

- Minify & Compress JS: Reduce file sizes and remove unused code.

- Defer & Async Loading: Use defer for scripts that depend on the DOM or on execution order, and async for independent scripts such as analytics, so that script downloads do not block HTML parsing.

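- Lazy-Load Non-Critical Elements: Use lazy loading for images, videos, or content below the fold, but trigger it with the native loading attribute or IntersectionObserver rather than scroll events, since Googlebot does not scroll.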
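
For images, native lazy loading keeps the real URL in the markup, so nothing depends on a scroll event. A small sketch, assuming a hypothetical image shape of `{ src, alt, width, height }` with trusted values, and treating the first image as above the fold:

```javascript
// Builds <img> tags: the first `eagerCount` images load immediately, the rest lazily.
// Assumes trusted values; escape them if they come from user input.
function imageTags(images, eagerCount = 1) {
  return images.map((img, index) => {
    const loading = index < eagerCount ? "eager" : "lazy";
    return `<img src="${img.src}" alt="${img.alt}" width="${img.width}" height="${img.height}" loading="${loading}">`;
  });
}
```

Keeping the hero image eager avoids delaying the largest above-the-fold element, while explicit width and height reduce layout shift.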

- Status Codes & Error Handling

- Proper HTTP Status Codes: Return 404 for non-existent pages, not a JS-based “Page Not Found” message with a 200 status.

- Avoid Soft 404s: Make sure pages that no longer exist or have no content return 404 or 410 instead of 200, so Google does not treat them as soft 404s.
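
The status code has to be decided on the server, before any JavaScript runs in the browser. A minimal sketch, using a hypothetical in-memory catalogue in place of a real database lookup:

```javascript
// Hypothetical in-memory catalogue standing in for a database lookup.
const PRODUCTS = new Map([["blue-widget", { name: "Blue Widget" }]]);

// Chooses the HTTP status on the server based on whether the content exists.
function productResponse(slug, products = PRODUCTS) {
  const product = products.get(slug);
  if (!product) {
    // A real 404 status, not a "not found" view rendered by JS on a 200 response.
    return { status: 404, body: "<h1>Page not found</h1>" };
  }
  return { status: 200, body: `<h1>${product.name}</h1>` };
}
```

In a single-page application where the server cannot know the status in advance, Google's documentation suggests redirecting to a URL that returns 404 or adding a noindex tag on the error view.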

- Indexation Signals & Directives

- Noindex & Nofollow: Keep meta directives consistent in source and rendered HTML. Don’t rely on JS to remove a noindex tag: when Google finds noindex in the source HTML, it may skip rendering, so the JS never runs.

- Canonical Tags: Ensure canonical tags are in the original HTML or properly rendered. Avoid conflicting signals between source and rendered HTML.
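
Conflicts between source and rendered directives are easy to miss by eye. The sketch below extracts the robots meta tag and canonical link from two HTML strings and reports which ones differ. It relies on regular expressions and assumes quoted attribute values, so it is a rough check rather than a full parser.

```javascript
// Reads a quoted attribute value from a single tag string.
function getAttribute(tag, name) {
  const match = tag.match(new RegExp(`\\s${name}\\s*=\\s*(["'])(.*?)\\1`, "i"));
  return match ? match[2] : null;
}

// Extracts the robots meta content and canonical href (the last occurrence wins).
function extractDirectives(html) {
  const directives = { robots: null, canonical: null };
  for (const [tag] of html.matchAll(/<(?:meta|link)\b[^>]*>/gi)) {
    const isMeta = /^<meta/i.test(tag);
    if (isMeta && (getAttribute(tag, "name") || "").toLowerCase() === "robots") {
      directives.robots = (getAttribute(tag, "content") || "").toLowerCase();
    }
    if (!isMeta && (getAttribute(tag, "rel") || "").toLowerCase() === "canonical") {
      directives.canonical = getAttribute(tag, "href");
    }
  }
  return directives;
}

// Names of the directives whose values differ between source and rendered HTML.
function directiveConflicts(sourceHtml, renderedHtml) {
  const source = extractDirectives(sourceHtml);
  const rendered = extractDirectives(renderedHtml);
  return Object.keys(source).filter((key) => source[key] !== rendered[key]);
}
```

An empty array means the two versions agree; `["robots"]` or `["canonical"]` points to the signal that JavaScript is changing.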

- URL Structure & Hashes

- Avoid Hash (#)-Based URLs for Main Content: Use clean, unique URLs without relying on hash fragments for different sections. Google generally ignores everything after the #, so hash-based routes collapse into a single URL.

- If using a single-page application, utilize the History API to create crawlable, distinct URLs.
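
When migrating an SPA away from hash routing, each hash route needs a path-based equivalent. A minimal sketch of that mapping, with a hypothetical function name; it assumes the server is also configured to respond to the resulting paths.

```javascript
// Converts "#/path" or "#!/path" style URLs into path-based URLs.
function hashRouteToPath(href) {
  const url = new URL(href);
  const route = url.hash.replace(/^#!?/, "");
  if (!route.startsWith("/")) return url.toString(); // ordinary in-page anchor, leave as is
  url.pathname = route;
  url.hash = "";
  return url.toString();
}

// In the browser, an SPA router can then update the address bar without a reload:
//   history.pushState({}, "", new URL(hashRouteToPath(location.href)).pathname);
```

Ordinary in-page anchors such as `#pricing` are left untouched, since they point to a section of the same document rather than a different view.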

- Structured Data & Rich Results

- Ensure structured data is visible in rendered HTML.

- Validate structured data in the Rich Results Test.
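
Structured data is safest when it is generated on the server and included in the initial HTML. The sketch below builds a JSON-LD script tag from a plain object. The product values are hypothetical, and the schema you need depends on the page type.

```javascript
// Serializes an object into a JSON-LD <script> tag for server-rendered HTML.
function jsonLdScriptTag(data) {
  // Escape "<" so a string value such as "</script>" cannot end the tag early.
  const json = JSON.stringify(data).replace(/</g, "\\u003c");
  return `<script type="application/ld+json">${json}</script>`;
}

// Hypothetical product values for illustration.
const productSchema = {
  "@context": "https://schema.org",
  "@type": "Product",
  name: "Blue Widget",
  description: "A hand-finished widget.",
};
```

Calling `jsonLdScriptTag(productSchema)` returns markup that can be placed in the page head and then pasted into the Rich Results Test for validation.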

- Testing & Continuous Monitoring

- Use Google Search Console: Regularly check the URL Inspection Tool for rendered output and indexing issues.

- Crawl Tools with JS Rendering (Screaming Frog, JetOctopus, Sitebulb): Compare source vs. rendered HTML and spot discrepancies.

- Page Speed Tools (Lighthouse, PageSpeed Insights): Identify JS-related performance bottlenecks.

- Log File Analysis: Check if Googlebot encounters issues fetching JS or CSS files.
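
For log file analysis, a short script can surface failed Googlebot requests for JS and CSS files. This sketch assumes logs in the common "combined" format, with the user agent as the last quoted field, and matches on the user-agent string only. User agents can be spoofed, so a production check would also verify the requesting IP.

```javascript
// Captures request path, status code, and user agent from a combined-format log line.
const LOG_LINE = /"(?:GET|HEAD) (\S+) [^"]*" (\d{3}) .*"([^"]*)"$/;

// Lists JS/CSS requests from Googlebot that returned a 4xx or 5xx status.
function failedGooglebotResourceFetches(logText) {
  const failures = [];
  for (const line of logText.split(/\r?\n/)) {
    const match = line.trim().match(LOG_LINE);
    if (!match) continue; // skip lines that are not in the expected format
    const [, path, status, userAgent] = match;
    const isResource = /\.(?:js|css)(?:\?|$)/i.test(path);
    if (isResource && /Googlebot/i.test(userAgent) && Number(status) >= 400) {
      failures.push({ path, status: Number(status) });
    }
  }
  return failures;
}
```

Recurring entries for the same file usually point to a missing asset, a blocked path, or a server that struggles under crawl load.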

Following these steps gives you a solid foundation: a site that is more accessible, crawlable, and indexable by search engines, which in turn supports better SEO performance.

When to Seek Help:

- If critical content is missing in rendered HTML and developers can’t fix it.

- If complex dynamic rendering setups are causing indexing delays.

- If you suspect advanced JS frameworks or SPA configurations are hindering SEO performance.

If you need help with JS web optimization, get in touch.